The working principle of fingerprint sensors and methods to improve matching performance

Time:2023-07-18

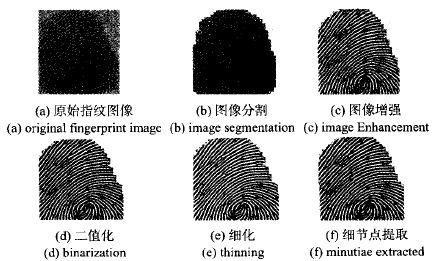

Fingerprint recognition, like other forms of biometric recognition, consists of two stages: user enrollment and feature matching. During enrollment, a fingerprint image is captured and the raw image is preprocessed, which includes enhancement, segmentation, binarization, and thinning. The detail features of the fingerprint are then extracted; the most common feature points are bifurcation points and endpoints. Finally, a template is generated and stored in the system database. For both verification and identification, the fingerprint image of the user to be recognized goes through the same steps (segmentation, binarization, thinning, and feature extraction) to produce data in the same format as the database template. The two are then compared to obtain the recognition result.

Existing fingerprint recognition algorithms assume that enrollment and authentication are carried out with the same fingerprint reader. As a result, online users can authenticate only with the same type of device they enrolled with. Practice has shown that switching to a different fingerprint device greatly reduces the performance of the verification system, because there is no standard for exchanging data between fingerprint devices.

Because different fingerprint readers use different algorithms, each system that relies on a reader requires its own enrollment, and authentication must use the same type of reader that was used for enrollment. Individuals and systems therefore have to keep several different types of readers. A universal algorithm that works across different readers has become a meaningful research topic. It would let users work with whichever reader is attached to their own computer, making online fingerprint verification systems more useful.

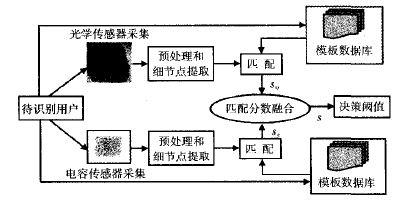

Because an image from a given type of fingerprint sensor can normally be verified only against images from the same type of sensor, multi-sensor fingerprint fusion is needed to improve system performance, both to serve more users and to stop impostors from deceiving the system. This article proposes a simple fusion strategy and studies it with two commonly used fingerprint sensors: optical sensors and capacitive sensors. Each sensor captures its own image; detail points (minutiae) are extracted after preprocessing and matched against the template fingerprint, giving two matching scores. The two scores are then combined by a fusion rule to give the final matching score. The results show that fusion improves system performance over a single sensor.

1. Proposed fusion framework

Figure 1 shows the framework of the proposed multi-sensor fingerprint verification system. First, the user's fingerprint is captured by both the optical and the capacitive sensor. The images are then preprocessed, and features are extracted separately from the image produced by each sensor. A matching algorithm based on detail points is applied to the optical and capacitive detail point sets separately. This yields two matching scores, which are combined using a fusion rule.

Figure 1 Framework of the multi-sensor fingerprint verification system

1.1 Types and working principles of fingerprint sensors

Fingerprint sensors come in many specifications, and there is still no suitable unified protocol or standard. The sensors currently on the market are mainly optical sensors and capacitive sensors.

1.1.1 Operating principle of optical sensors

The basic principle is as follows. The finger is pressed against one side of a glass plate, and an LED light source and a CCD camera are mounted on the other side. The beam from the LED strikes the glass at an angle, and the camera receives the light reflected from the glass surface. The ridges of the finger touch the glass surface, while the valleys do not. Light striking the glass where a ridge is in contact is therefore diffusely reflected (scattered), while light striking the glass beneath a valley is totally reflected. As a result, in the image captured by the CCD camera the ridges appear darker and the valleys appear lighter.
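The total reflection under the valleys can be illustrated with the standard critical-angle relation from Snell's law. The refractive indices below are typical textbook values for glass and air, assumed here for illustration; the article does not state the actual materials or the illumination angle.

```latex
\theta_c = \arcsin\!\left(\frac{n_2}{n_1}\right), \qquad
n_1 \approx 1.5,\; n_2 \approx 1.0 \;\Rightarrow\; \theta_c \approx 41.8^\circ
```

Under these assumed values, light hitting the glass-air boundary beneath a valley at more than about 41.8° from the normal is totally reflected toward the camera, whereas skin in contact with the glass at a ridge disturbs this condition and scatters the light.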

1.1.2 Working principle of capacitive sensors

Capacitive sensors rely on the fact that when a finger is pressed onto the sensing surface, the ridges and valleys form different capacitances between the skin and the chip. The chip reconstructs the complete fingerprint by measuring the resulting differences in the electric field across its surface. This construction makes fingerprints much harder to forge: fake fingerprints are usually made of insulating materials such as silicone resin or gelatin, which do not produce an image on a capacitive sensor and are therefore useless. However, capacitive chips are expensive and susceptible to interference.
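As a rough illustration of why ridges and valleys produce different capacitances, each sensing cell can be idealised as a parallel-plate capacitor. This is a simplified model assumed for illustration, not a description of any particular chip; the symbols (permittivity, plate area, skin-to-plate distance) are not taken from the article.

```latex
C = \frac{\varepsilon A}{d}
```

Here $\varepsilon$ is the permittivity of the material between skin and plate, $A$ the plate area, and $d$ the distance to the skin. A ridge sits closer to the plate (smaller $d$) than a valley, so under this model it yields a larger capacitance, and the cell-by-cell differences form the image.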

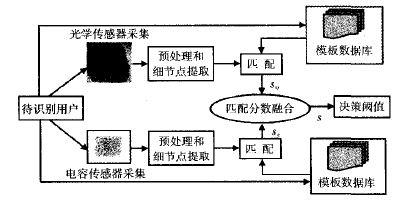

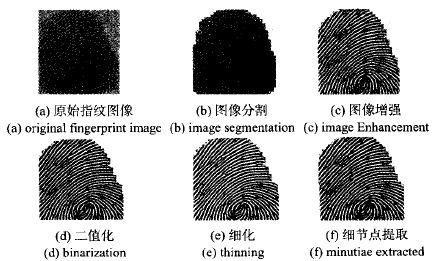

1.2 Fingerprint Image Processing

In the identification process, the fingerprint is first captured by the fingerprint acquisition device. If the captured image is of poor quality, or if uneven pressure distorts the fingerprint, segmentation of the image is often inaccurate. This makes the later recognition steps harder and causes the automatic fingerprint identification system to reject or misidentify fingerprints. The first key step after capture is therefore to preprocess the fingerprint image, which includes enhancement, binarization, and thinning. Once preprocessing is complete, features are extracted and then matched to produce the matching result, as shown in Figure 2.

Figure 2 Fingerprint Image Preprocessing Steps
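To make the binarization step concrete, here is a minimal sketch that thresholds a grayscale patch at its global mean. The function name `binarize` and the global-mean threshold are assumptions for illustration; the article does not say which thresholding method it uses, and practical systems often use local or adaptive thresholds instead.

```python
def binarize(image, threshold=None):
    """Return a 0/1 image: 1 marks dark (ridge) pixels, 0 marks light (valley) pixels."""
    pixels = [p for row in image for p in row]
    if threshold is None:
        # Assumption: use the global mean intensity as a crude threshold.
        threshold = sum(pixels) / len(pixels)
    return [[1 if p < threshold else 0 for p in row] for row in image]


# Tiny 3x4 grayscale patch (0 = black, 255 = white) with one dark vertical ridge.
patch = [
    [200, 40, 210, 220],
    [190, 35, 205, 215],
    [195, 50, 200, 225],
]
binary = binarize(patch)
```

In this patch the dark second column is marked as ridge pixels and everything else as background, which is the form the later thinning and feature extraction steps work on.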

Finally, the detail points are extracted and classified as endpoints or bifurcation points (as shown in Figure 3). An endpoint is the point where a ridge terminates, while a bifurcation point is the point where a ridge splits into two ridges. These two types of feature point occur most often in fingerprint images, are the most stable, are easy to detect, and are sufficient to describe the uniqueness of a fingerprint.

Figure 3 Types of fingerprint detail points
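One common way to locate endpoints and bifurcation points on a thinned (one-pixel-wide) ridge image is the crossing-number test. The sketch below is an illustration of that general technique, not necessarily the extraction method used in this article; the function names and the sample skeleton are hypothetical.

```python
def crossing_number(skel, r, c):
    """Half the number of 0/1 transitions around the 8 neighbours of (r, c)."""
    # Neighbours visited in a closed clockwise loop starting at the top-left.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    ring = [skel[r + dr][c + dc] for dr, dc in offsets]
    return sum(abs(ring[i] - ring[(i + 1) % 8]) for i in range(8)) // 2


def extract_minutiae(skel):
    """Return (row, col, kind) for interior skeleton pixels that are endpoints or bifurcations."""
    found = []
    for r in range(1, len(skel) - 1):
        for c in range(1, len(skel[0]) - 1):
            if skel[r][c] != 1:
                continue
            cn = crossing_number(skel, r, c)
            if cn == 1:
                found.append((r, c, "ending"))
            elif cn == 3:
                found.append((r, c, "bifurcation"))
    return found


# A ridge that starts at (3, 1), runs right, and splits into two branches at (3, 3).
skeleton = [
    [0, 0, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 1],
]
minutiae = extract_minutiae(skeleton)
```

Border pixels are skipped because their neighbourhood is incomplete, so the two branch tips on the image edge are not reported as endpoints.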

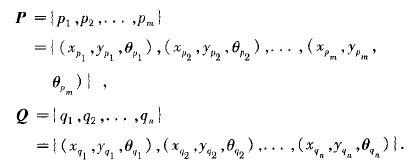

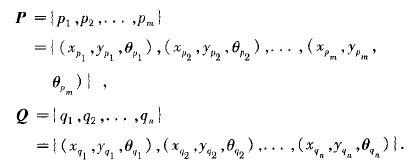

Matching two fingerprint images mainly has to cope with rotation, translation, and deformation. In this article, the input to fingerprint matching is two sets of feature points, P and Q. Point set P is extracted from the input fingerprint image, while point set Q was extracted in advance from the reference fingerprint image and stored in the template library. The two point sets are described as follows.

For the i-th feature point in point set P, three values are recorded: its x coordinate, its y coordinate, and its direction. The same three values are recorded for the j-th feature point in point set Q. If the two fingerprint images match perfectly, the template image can be obtained from the input image by a suitable transformation (rotation, translation, and scaling), so point set P can be mapped approximately onto point set Q.
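The description above can be written compactly as follows. The superscripts, the symbol $\theta$ for direction, and the set sizes $M$ and $N$ are notation assumed here for illustration; the article's own formula is not reproduced in the text.

```latex
P = \{(x_i^P,\, y_i^P,\, \theta_i^P)\}_{i=1}^{M}, \qquad
Q = \{(x_j^Q,\, y_j^Q,\, \theta_j^Q)\}_{j=1}^{N}
```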

To map a feature point of the input fingerprint image onto the corresponding position in the template fingerprint image, the transformation factors must be known: Δx and Δy are the translation factors in the x and y directions, and θ is the rotation factor. The matching reference points are found by testing the similarity of triangles formed by neighboring feature points in the two images. Once the reference points and transformation factors are known, the fingerprint to be recognized is rotated and translated relative to the template fingerprint to decide whether the two come from the same finger. This gives the coordinates of each feature point of the transformed fingerprint and the ridge direction in its region. The transformed feature point set is then superimposed on the template feature point set, and the overlapping feature points in the two sets are counted. Because the matching is inexact, even a genuinely matching pair of feature points will not coincide exactly; there is always some deviation in position and direction, so a certain tolerance must be allowed.
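The rotation and translation step can be sketched as below. This is a minimal illustration assuming rotation about the coordinate origin and angles in radians; the article may rotate about the reference point instead, and the function name `transform_point` is hypothetical.

```python
import math


def transform_point(point, dx, dy, theta):
    """Rotate an (x, y, direction) feature point by theta radians, then translate by (dx, dy)."""
    x, y, direction = point
    new_x = x * math.cos(theta) - y * math.sin(theta) + dx
    new_y = x * math.sin(theta) + y * math.cos(theta) + dy
    # The ridge direction turns by the same angle as the image.
    new_direction = (direction + theta) % (2 * math.pi)
    return (new_x, new_y, new_direction)


# Rotate the point (1, 0) by 90 degrees, then shift it by (2, 3).
moved = transform_point((1.0, 0.0, 0.0), dx=2.0, dy=3.0, theta=math.pi / 2)
```

Applying this function to every point of the input set gives the transformed set that is then superimposed on the template set.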

This article therefore uses a bounding box method. For each feature point in the template feature point set, a rectangular region around it is chosen as its bounding box. If a feature point of the transformed fingerprint falls inside this region after superposition and its direction is roughly the same, the two feature points are considered a matching pair.
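The bounding box test can be sketched as follows. The box half-width of 8 pixels, the 15-degree direction tolerance, and the greedy one-to-one pairing are all assumptions chosen for illustration; the article does not give its tolerance values or say how ties are resolved.

```python
import math


def angle_diff(a, b):
    """Smallest absolute difference between two angles in radians."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def count_matches(template, aligned_input, box_half=8.0, angle_tol=math.radians(15)):
    """Count one-to-one pairs where an input point falls inside a template point's bounding box."""
    used = set()  # indices of input points that are already paired
    matched = 0
    for tx, ty, tdir in template:
        for k, (ix, iy, idir) in enumerate(aligned_input):
            if k in used:
                continue
            inside = abs(ix - tx) <= box_half and abs(iy - ty) <= box_half
            if inside and angle_diff(idir, tdir) <= angle_tol:
                used.add(k)
                matched += 1
                break
    return matched


template_points = [(10.0, 10.0, 0.0), (50.0, 50.0, 1.0), (90.0, 20.0, 2.0)]
input_points = [(12.0, 9.0, 0.1), (52.0, 55.0, 1.1), (91.0, 21.0, 0.5)]
pairs = count_matches(template_points, input_points)
```

The third input point lies inside the third template box but its direction differs by 1.5 radians, so it is not counted and only two pairs match.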

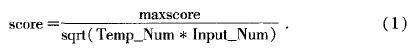

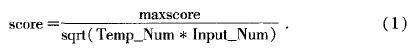

Finally, the algorithm counts all matched feature points and converts the count into a matching score using equation (1), where maxscore is the maximum matching score, given by the number of matched detail points obtained by superposition, and Temp-Num and Input-Num are the numbers of detail points in the template and input fingerprints, respectively.

The matching score expresses how similar the two compared fingerprints are: the larger the score, the greater the similarity. If the score is low, the user may not be who they claim to be, and access is denied.
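Equation (1) itself is not reproduced in the text, so the normalization below is purely an assumption chosen for illustration and should not be read as the article's formula. It only shows the general idea of turning a match count into a score that grows with similarity and is then compared with a threshold. The parameter names mirror maxscore, Temp-Num, and Input-Num.

```python
def matching_score(maxscore, temp_num, input_num):
    """Illustrative normalization (an assumption, not the article's equation (1))."""
    if temp_num == 0 or input_num == 0:
        return 0.0
    # Squaring the match count and dividing by both set sizes keeps the score in [0, 100].
    return 100.0 * maxscore * maxscore / (temp_num * input_num)


def accept(score, threshold):
    """Grant access only when the score exceeds the threshold."""
    return score > threshold


score = matching_score(maxscore=18, temp_num=20, input_num=24)
```

With these example numbers the score is 67.5, so a hypothetical threshold of 60 would accept the user and a threshold of 70 would reject them.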

The algorithm used in this article is a typical point pattern matching algorithm based on a feature point coordinate model. It concentrates on the most difficult steps of the matching process: determining the reference points and determining the transformation parameters. The reference points are determined, and the transformation parameters obtained, from the mutual relationship among three neighboring feature points. This speeds up the extraction of reference points to some extent, and therefore the matching algorithm as a whole. In addition, the transformation parameters are determined from several points rather than from a single point as is usual, which to some extent cancels the position and angle deviations introduced during feature extraction and gives more accurate transformation parameters.
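One way to compare groups of three neighboring feature points independently of rotation and translation is to compare the side lengths of the triangles they form. The sketch below illustrates that idea only; the article does not spell out its exact criterion, and the function names and the 2-pixel tolerance are assumptions. Comparing raw side lengths also assumes no change of scale; side ratios would be needed if scaling were allowed.

```python
import math


def side_lengths(p1, p2, p3):
    """Sorted side lengths of the triangle through three (x, y) points."""
    return sorted([math.dist(p1, p2), math.dist(p2, p3), math.dist(p1, p3)])


def triangles_match(tri_a, tri_b, tol=2.0):
    """True when corresponding sorted sides differ by at most tol pixels."""
    sides_a = side_lengths(*tri_a)
    sides_b = side_lengths(*tri_b)
    return all(abs(a - b) <= tol for a, b in zip(sides_a, sides_b))


tri_input = ((0.0, 0.0), (30.0, 0.0), (0.0, 40.0))
# The same triangle rotated by 90 degrees and shifted by (100, 50).
tri_template = ((100.0, 50.0), (100.0, 80.0), (60.0, 50.0))
tri_other = ((0.0, 0.0), (10.0, 0.0), (0.0, 10.0))
```

Because sorted side lengths do not change under rotation or translation, `tri_input` and `tri_template` match while `tri_other` does not, which is the property that makes such triangles usable as candidate reference points.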

1.3 Fusion of optical and capacitive sensors

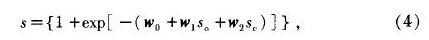

So and Sc are the matching scores obtained by applying the matching algorithm to the images captured by the optical sensor and the capacitive sensor, respectively. The relationship between the fused score S and So, Sc is given by equation (2).

S is then compared with a preset threshold: if S > threshold, the user is accepted as genuine; otherwise the user is rejected. The same method can also be applied to more than two sensors.

Following equation (2), two types of matching score transformation were studied as fusion rules. The first type is the so-called fixed fusion rule, which requires no parameter estimation. In particular, the median of the matching scores of the two sensors was studied.

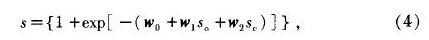
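The fixed rule and the threshold decision can be sketched as follows. Note that the median of exactly two scores is the same as their average. The function names and the example threshold are assumptions for illustration.

```python
from statistics import median


def fuse_median(s_o, s_c):
    """Fixed fusion rule: median of the optical and capacitive scores."""
    return median([s_o, s_c])


def verify(s_o, s_c, threshold):
    """Accept the user only when the fused score exceeds the threshold."""
    return fuse_median(s_o, s_c) > threshold


fused = fuse_median(70.0, 50.0)
```

Because the rule has no parameters, nothing needs to be estimated from training data; only the decision threshold has to be chosen.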

The second type is the trained fusion rule, so called because the samples must go through several rounds of training to obtain an ideal threshold score; formula (4) is used for the training.

In the formula, W0, W1, and W2 are the weights. Median fusion performs worse than logical fusion. Logical fusion starts from the median and, after a number of iterations, arrives at a suitable set of weights (W0, W1, W2) that brings the threshold score S close to its optimal value.
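Formula (4) is not reproduced in the text. The sketch below assumes that "logical fusion" means a logistic function of a weighted sum, S = 1 / (1 + exp(-(W0 + W1·So + W2·Sc))), which is a common trained fusion rule with three weights; this form, the gradient-descent training loop, and the toy scores (scaled to the range 0 to 1) are all assumptions for illustration.

```python
import math


def logistic_fuse(s_o, s_c, w):
    """Fused score in (0, 1) from weights w = (W0, W1, W2)."""
    z = w[0] + w[1] * s_o + w[2] * s_c
    return 1.0 / (1.0 + math.exp(-z))


def train_weights(samples, labels, lr=0.5, epochs=2000):
    """Fit (W0, W1, W2) by batch gradient descent on the log-loss."""
    w = [0.0, 0.0, 0.0]
    n = len(samples)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for (s_o, s_c), y in zip(samples, labels):
            err = logistic_fuse(s_o, s_c, w) - y
            grad[0] += err
            grad[1] += err * s_o
            grad[2] += err * s_c
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w


# Toy training data: (optical score, capacitive score); label 1 = genuine, 0 = impostor.
genuine = [(0.9, 0.7), (0.8, 0.6), (0.7, 0.8)]
impostor = [(0.2, 0.3), (0.3, 0.1), (0.1, 0.2)]
weights = train_weights(genuine + impostor, [1, 1, 1, 0, 0, 0])
```

After training on this toy data, genuine pairs receive fused scores above 0.5 and impostor pairs below 0.5, so a threshold near 0.5 separates them. Real score distributions overlap, which is why the weights are fitted on a training set and evaluated on a separate test set.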

2. Experimental results

Twenty people were selected at random, each using three fingers: the thumb, index finger, and middle finger. Each finger was pressed 10 times on both the optical and the capacitive sensor, giving 3 fingers × 2 sensors × 10 = 60 images per person and 20 × 60 = 1200 images in total. For each verification algorithm, two sets of matching scores were computed. The first is the set G of "genuine matching scores" (between genuine users), and the second is the set I of "impostor matching scores" (from impostor attempts).

Each of these sets is randomly divided into two subsets of equal size: G = G1 ∪ G2 and I = I1 ∪ I2, where G1, G2 and I1, I2 are disjoint subsets of G and I, respectively. The training set Tr = {G1, I1} is used to compute the weights of the logical fusion rule, and the test set Tx = {G2, I2} is used to evaluate and compare the algorithms. Performance is assessed with the following indicators:

The equal error rate (EER) on the training set, that is, the error rate at which the percentage of genuine users wrongly rejected by the system (false rejection rate, FRR) equals the percentage of impostors wrongly accepted by the system (false acceptance rate, FAR).
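The random split and the EER can be sketched as below. With a finite set of scores FAR and FRR rarely coincide exactly, so this sketch scans the observed scores as candidate thresholds and reports the point where the two rates are closest; that search strategy, the function names, and the toy scores are assumptions for illustration.

```python
import random


def split_half(scores, rng):
    """Randomly split a list of scores into two disjoint halves."""
    shuffled = scores[:]
    rng.shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def far_frr(genuine, impostor, threshold):
    """FAR: share of impostor scores accepted; FRR: share of genuine scores rejected."""
    far = sum(s > threshold for s in impostor) / len(impostor)
    frr = sum(s <= threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Return (eer, threshold) at the candidate threshold where FAR and FRR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


g_scores = [0.9, 0.8, 0.75, 0.6, 0.4]
i_scores = [0.1, 0.2, 0.3, 0.5, 0.7]
eer, eer_threshold = equal_error_rate(g_scores, i_scores)
g1, g2 = split_half(g_scores, random.Random(0))
```

For the toy scores, FAR and FRR are both 20% at a threshold of 0.5, so the EER is 0.2. In the protocol described above, the threshold and fusion weights would be chosen on Tr and the error rates then reported on Tx.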

The capacitive sensor performs significantly worse than the optical sensor. The main reason is that the contact area of the capacitive sensor during image capture is much smaller than that of the optical sensor. Fewer detail points can therefore be extracted from its images, and too few are available to be matched correctly.

In terms of equal error rate, fusion also improves performance: logical fusion reduces the EER from 3.6% to 2.9%. The results on the test samples further indicate that fusion improves the robustness of the system. After logical fusion (row 5 of Table 1), the gap in performance between the training samples (column 2 of Table 1) and the test samples (columns 3 and 4 of Table 1) is greatly reduced.

Compared with the experimental results of Gian Luca, the indicators obtained here are worse than those in reference [7]. One possible cause is the poor performance of the acquisition devices used in this work, which produced fingerprint images of unsatisfactory quality. Another is that the matching algorithm used in this work may not produce sufficiently good matching results.

3. Conclusion

This article proposes a multi-sensor fingerprint verification system based on optical and capacitive sensors. The experimental results show that the multi-sensor system performs better than the best single sensor (the optical sensor), and the complementarity between the optical and capacitive matchers indicates the potential of multi-sensor fusion. In theory, the system achieves a very low verification error rate. Feature extraction is applied separately to the images from each acquisition device, and a simple fusion rule is applied to improve verification performance. Combining different types of sensors is therefore a simple and feasible way to improve system performance.

